Backtesting

Results

View Backtest Results

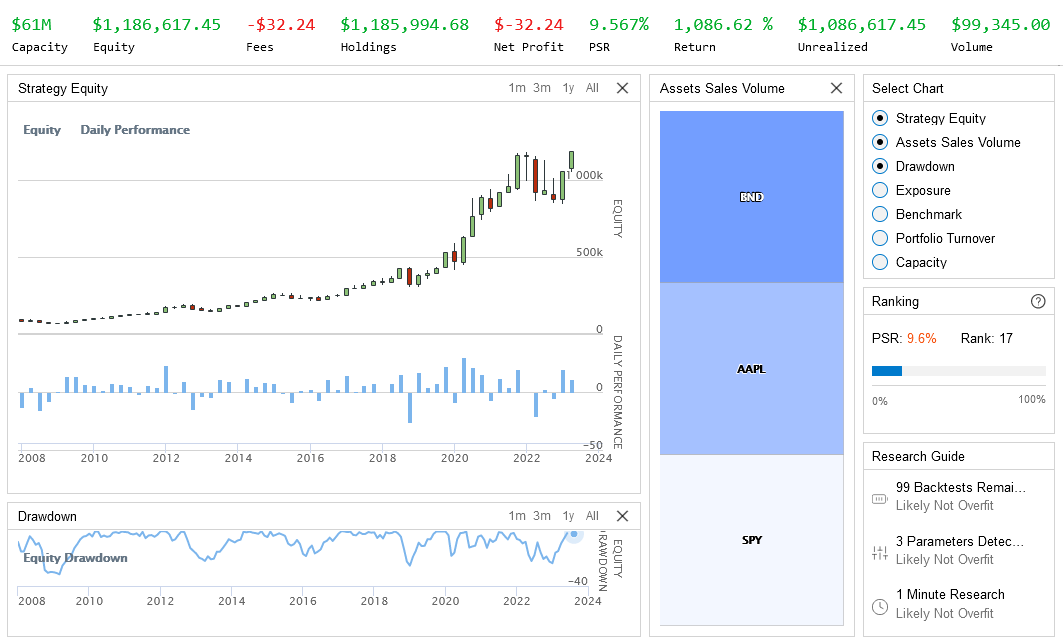

The backtest results page automatically displays when you deploy a backtest. It presents the equity curve, trades, logs, performance statistics, and more.

The content in the backtest results page updates as your backtest executes. You can close Local Platform without interrupting the backtest as long as you keep Docker running. If you close the page, you can reopen it from the list of the project's backtests (see View All Backtests). Only you can view the results of local backtests. If you run the backtest in QuantConnect Cloud, only you can view its results unless you explicitly make the backtest public. If you delete a backtest result or you are inactive for 12 months, we archive your backtest results.

The information on the backtest results page is also available in its raw form. To access it, see View Result Files.

Runtime Statistics

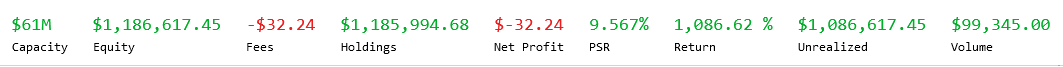

The banner at the top of the backtest results page displays the runtime statistics of your backtest.

The following table describes the default runtime statistics:

| Statistic | Description |
|---|---|
| Equity | The total portfolio value if all of the holdings were sold at current market rates. |
| Fees | The total amount of fees paid for all the transactions. |
| Holdings | The absolute sum of the items in the portfolio. |
| Net Profit | The dollar-value return across the entire trading period. |
| PSR | The probability that the estimated Sharpe ratio of an algorithm is greater than a benchmark (1). |
| Return | The rate of return across the entire trading period. |
| Unrealized | The amount of profit a portfolio would capture if it liquidated all open positions and paid the fees for transacting and crossing the spread. |
| Volume | The total value of assets traded for all of an algorithm's transactions. |

To view the runtime statistics data in JSON format, open the <organizationWorkspace> / <projectName> / backtests / <unixTimestamp> / <algorithmId>.json file and search for the RuntimeStatistics key.
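You can also read these values from a script instead of searching the file by hand. The following Python sketch loads a local result file and returns the first value stored under a given key. It searches the whole JSON tree because this page doesn't say where the key sits in the file. The function names are hypothetical helpers, not part of LEAN.

```python
import json
from pathlib import Path
from typing import Any, Optional


def find_key(node: Any, key: str) -> Optional[Any]:
    """Depth-first search for the first value stored under `key`."""
    if isinstance(node, dict):
        if key in node:
            return node[key]
        children = node.values()
    elif isinstance(node, list):
        children = node
    else:
        return None
    for child in children:
        found = find_key(child, key)
        if found is not None:
            return found
    return None


def load_result_section(result_file: Path, key: str = "RuntimeStatistics") -> Optional[Any]:
    """Load a local backtest result file and return the section stored under `key`."""
    with result_file.open(encoding="utf-8") as f:
        return find_key(json.load(f), key)
```

For example, load_result_section(Path("<organizationWorkspace>/<projectName>/backtests/<unixTimestamp>/<algorithmId>.json")) returns the runtime statistics. To get the other sections that this page describes, pass key="Charts" or key="Orders".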

To add a custom runtime statistic, see Add Statistics.

Built-in Charts

The backtest results page displays a set of built-in charts to help you analyze the performance of your algorithm. The following table describes the charts displayed on the page:

| Chart | Description |
|---|---|
| Strategy Equity | A time series of equity and periodic returns. |
| Capacity | A time series of strategy capacity snapshots. |
| Drawdown | A time series of the equity's peak-to-trough decline. |
| Benchmark | A time series of the benchmark closing price (SPY, by default). |
| Exposure | A time series of long and short exposure ratios. |
| Assets Sales Volume | A chart showing the proportion of total volume for each traded security. |
| Portfolio Turnover | A time series of the portfolio turnover rate. |
| Portfolio Margin | A stacked area chart of the portfolio margin usage. For more information about this chart, see Portfolio Margin Plots. |
| Performance | A time series of computational performance metrics. For more information about this chart, see Performance Chart. |

To view the chart data in JSON format, open the <organizationWorkspace> / <projectName> / backtests / <unixTimestamp> / <algorithmId>.json file and search for the Charts key.
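A short script can give you an overview of which charts and series a backtest produced. This Python sketch makes two assumptions about the file layout: Charts is a top-level object keyed by chart name, and each chart keeps its series in an object under a "Series" key. Confirm both against your own file. The function name is hypothetical.

```python
import json
from pathlib import Path
from typing import Dict, List


def list_chart_series(result_file: Path) -> Dict[str, List[str]]:
    """Map each chart name under the top-level Charts key to its sorted series names.

    Assumes each chart object stores its series in an object under "Series".
    """
    with result_file.open(encoding="utf-8") as f:
        charts = json.load(f).get("Charts", {})
    return {
        name: sorted((chart or {}).get("Series", {}).keys())
        for name, chart in charts.items()
    }
```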

Performance Chart

The Performance chart displays a series of metrics that help you analyze the computational performance of your algorithm so you can optimize its code. The following table describes the series displayed on the chart:

| Series | Description |
|---|---|
| CPU | Total CPU usage as a percentage. |
| ManagedRAM | RAM used on the machine. |
| TotalRAM | Amount of private memory allocated for the current process (includes both managed and unmanaged memory). |
| ActiveSecurities | The number of active securities. An active security is a security that is currently selected by the universe or has holdings or open orders. |
| DataPoints | The number of data points processed per second. |
| HistoryDataPoints | The number of data points returned by the algorithm's history provider. |
| Subscriptions | The total execution time reading data subscriptions, measured in seconds, recorded since the last sampling event. |
| Selection | The total execution time of universe selection (adding and removing securities), measured in seconds, recorded since the last sampling event. It includes the time spent in universe selection functions. |
| Slice | The total creation time of time slices, measured in seconds, recorded since the last sampling event. The Slice object contains the data used to update the algorithm state. |
| Schedule | The total execution time of scheduled events, measured in seconds, recorded since the last sampling event. |
| Consolidators | The total execution time of data consolidation events, measured in seconds, recorded since the last sampling event. It includes the time spent updating indicators and executing consolidator handlers. |
| Securities | The total execution time of security updates, measured in seconds, recorded since the last sampling event. It includes the time spent in security change events and symbol change events. |
| Transactions | The total execution time of order events, measured in seconds, recorded since the last sampling event. Order events include processing order fills, cancellations, and updates such as moving trailing stops. It includes the time spent in order event handlers. |
| SplitsDividendsDelisting | The total execution time of corporate action events, measured in seconds, recorded since the last sampling event. |
| OnData | The total execution time of OnData/on_data method calls and Alpha.Update/alpha.update calls, measured in seconds, recorded since the last sampling event. |

The Performance chart is disabled by default. To enable it, set the Settings.PerformanceSamplePeriod property (C#) or the self.settings.performance_sample_period attribute (Python) to the desired sampling period:

Settings.PerformanceSamplePeriod = TimeSpan.FromDays(7);

self.settings.performance_sample_period = timedelta(7)

Custom Charts

The results page shows the custom charts that you create.

Supported Chart Types

We support the following types of charts:

If you use SeriesType.Candle and plot enough values, the plot displays candlesticks. However, the Plot/plot method only accepts one numerical value per time step, so you can't plot candles that represent the open, high, low, and close values of each bar in your algorithm. The charting software automatically groups the data points you provide to create the candlesticks, so you can't control the period of time that each candlestick represents.

To create other types of charts, save the plot data in the Object Store and then load it into the Research Environment. In the Research Environment, you can create other types of charts with third-party charting packages.
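The Object Store holds text or bytes, so one approach is to serialize the points you would otherwise plot as a JSON string. The following Python sketch shows only the serialization round trip in plain Python, and its helper names are hypothetical. In an algorithm, you would pass the string to the Object Store (for example, self.object_store.save(key, text)). In the Research Environment, you would read it back (for example, qb.object_store.read(key)) and then plot it with a third-party package such as matplotlib.

```python
import json
from datetime import datetime
from typing import List, Tuple

# A single plotted point: (timestamp, value).
Point = Tuple[datetime, float]


def points_to_json(points: List[Point]) -> str:
    """Serialize (time, value) pairs into a JSON string for storage."""
    return json.dumps([[t.isoformat(), v] for t, v in points])


def points_from_json(text: str) -> List[Point]:
    """Rebuild the (time, value) pairs from the stored JSON string."""
    return [(datetime.fromisoformat(t), float(v)) for t, v in json.loads(text)]
```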

Supported Markers

When you create scatter plots, you can set a marker symbol. We support the following marker symbols:

Chart Quotas

If you execute backtests in QuantConnect Cloud, see Custom Charts for more information about the charting quotas.

If you execute local backtests, the charting quotas are set by the maximum-chart-series and maximum-data-points-per-chart-series configuration settings.

Demonstration

For more information about creating custom charts, see Charting.

Adjust Charts

You can manipulate the charts displayed on the backtest results page.

Toggle Charts

To display and hide a chart on the backtest results page, in the Select Chart section, click the name of a chart.

Toggle Chart Series

To display and hide a series on a chart on the backtest results page, click the name of a series at the top of a chart.

Adjust the Display Period

To zoom in and out of a time series chart on the backtest results page, perform either of the following actions:

- Click one of the period buttons in the top-right corner of the chart.

- Click a point on the chart and drag your mouse horizontally to highlight a specific period of time in the chart.

If you adjust the zoom on a chart, it affects all of the charts.

After you zoom in on a chart, slide the horizontal bar at the bottom of the chart to adjust the time frame that displays.

Resize Charts

To resize a chart on the backtest results page, hover over the bottom-right corner of the chart. When the resize cursor appears, hold the left mouse button and then drag to the desired size.

Move Charts

To move a chart on the backtest results page, click, hold, and drag the chart title.

Refresh Charts

Refreshing the charts on the backtest results page resets the zoom level on all the charts. If you refresh the charts while your algorithm is executing, the charts display only the data that the LEAN engine processes after the refresh. To refresh the charts, in the Select Chart section, click the reset icon.

Key Statistics

The backtest results page displays many key statistics to help you analyze the performance of your algorithm.

Overall Statistics

The Overview tab on the backtest results page displays tables for Overall Statistics and Rolling Statistics. The Overall Statistics table displays the following statistics:

- Probabilistic Sharpe Ratio (PSR)

- Total Trades

- Average Loss

- Drawdown

- Net Profit

- Loss Rate

- Profit-Loss Ratio

- Beta

- Annual Variance

- Tracking Error

- Total Fees

- Lowest Capacity Asset

- Sharpe Ratio

- Average Win

- Compounding Annual Return

- Expectancy

- Win Rate

- Alpha

- Annual Standard Deviation

- Information Ratio

- Treynor Ratio

- Estimated Strategy Capacity

Some of the preceding statistics are sampled throughout the backtest to produce a time series of rolling statistics. The time series are displayed in the Rolling Statistics table.

To view the data from the Overall Statistics and Rolling Statistics tables in JSON format, open the <organizationWorkspace> / <projectName> / backtests / <unixTimestamp> / <algorithmId>.json file.
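The statistic values in the JSON file are usually formatted for display, with percent signs, dollar signs, and thousands separators. To compare backtests numerically, you can convert these strings to floats. The following Python sketch does that. The formats it handles are assumptions based on how the statistics typically look, so check them against your own file. The function name is hypothetical.

```python
from typing import Optional


def parse_statistic(text: str) -> Optional[float]:
    """Convert a display-formatted statistic such as "12.5%" or "$1,234.50" to a float.

    Percentages are returned as fractions (12.5% -> 0.125). Returns None when the
    text isn't numeric, for example an asset identifier like Lowest Capacity Asset.
    """
    cleaned = text.strip().replace(",", "").replace("$", "")
    is_percent = cleaned.endswith("%")
    if is_percent:
        cleaned = cleaned[:-1]
    try:
        value = float(cleaned)
    except ValueError:
        return None
    return value / 100 if is_percent else value
```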

Research Guide

For information about the Research Guide, see Research Guide.

Reports

Backtest reports provide a summary of your algorithm's performance during the backtest period. Follow these steps to generate one:

- Open the backtest results page for which you want to generate a report.

- Click the Report tab.

- If the project doesn't have a description, enter one and then click .

- Click .

- If the IDE says that the report is being generated, repeat step 4.

The report may take a minute to generate.

If you create a report for a local backtest, the report is stored in the <organizationWorkspace> / <projectName> / backtests / <unixTimestamp> directory as report.html and report.pdf.
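To open the report files without browsing the directory tree, a script can locate the newest backtest directory of a project. This Python sketch treats the directory with the most recent modification time as the latest backtest, which is an assumption about how you define "latest". The function name is hypothetical.

```python
from pathlib import Path
from typing import Dict, Optional


def latest_report_files(project_dir: Path) -> Dict[str, Optional[Path]]:
    """Return the report.html and report.pdf paths of the most recent backtest.

    Picks the backtest directory with the newest modification time and maps each
    file name to its path, or to None if that file hasn't been generated yet.
    """
    backtests = [p for p in (project_dir / "backtests").iterdir() if p.is_dir()]
    if not backtests:
        return {"report.html": None, "report.pdf": None}
    latest = max(backtests, key=lambda p: p.stat().st_mtime)
    return {
        name: (latest / name if (latest / name).is_file() else None)
        for name in ("report.html", "report.pdf")
    }
```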

Customize the Report HTML

The Report / template.html file in the LEAN GitHub repository defines the structure of the reports you generate. To override it, add a report.html file to your project. To include some of the information and charts from the default report, use the report keys defined in the Report / ReportKey.cs file in the LEAN GitHub repository. For example, to add the Sharpe ratio of your backtest to the custom HTML file, use {{$KPI-SHARPE}}.
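You may want to preview a custom report.html before LEAN fills it in. One way is to substitute sample values for the report keys yourself. The following Python sketch is not LEAN's report generator. It only replaces {{$NAME}} placeholders with values from a dictionary you supply, and it leaves unknown keys untouched. The helper name and any sample values you pass in are hypothetical.

```python
import re
from typing import Mapping

# Matches report keys such as {{$KPI-SHARPE}} or {{$TEXT-CRISIS-TITLE}}.
KEY_PATTERN = re.compile(r"\{\{\$([A-Z0-9-]+)\}\}")


def fill_report_keys(template: str, values: Mapping[str, str]) -> str:
    """Replace each {{$KEY}} with values[KEY]; keys without a value stay as-is."""
    def substitute(match: re.Match) -> str:
        return values.get(match.group(1), match.group(0))

    return KEY_PATTERN.sub(substitute, template)
```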

To include the crisis event plots in your report, add the {{$HTML-CRISIS-PLOTS}} key and then define the structure of an individual plot between the <!--crisis and crisis--> markers. Inside this comment, you can use the {{$TEXT-CRISIS-TITLE}} and {{$PLOT-CRISIS-CONTENT}} keys. For example, the following HTML is the default format for each crisis plot:

<!--crisis
<div class="col-xs-4">
    <table class="crisis-chart table compact">
        <thead>
            <tr>
                <th style="display: block; height: 75px;">{{$TEXT-CRISIS-TITLE}}</th>
            </tr>
        </thead>
        <tbody>
            <tr>
                <td style="padding:0;">
                    <img src="{{$PLOT-CRISIS-CONTENT}}">
                </td>
            </tr>
        </tbody>
    </table>
</div>
crisis-->

To include the algorithm parameters in your report, add the {{$PARAMETERS}} key and then define the HTML element between the <!--parameters and parameters--> markers. Inside this comment, you can use the special keys {{$KEY<parameterIndex>}} and {{$VALUE<parameterIndex>}}, which represent the name and value of a single parameter. For example, the following HTML is the default format for the parameters element:

<!--parameters
<tr>
    <td class = "title"> {{$KEY0}} </td><td> {{$VALUE0}} </td>
    <td class = "title"> {{$KEY1}} </td><td> {{$VALUE1}} </td>
</tr>
parameters-->

In the preceding example, {{$KEY0}} is the name of the first parameter in the algorithm and {{$VALUE0}} is its value.
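This page doesn't say how the template behaves when an algorithm has more parameters than a row has slots. The following Python sketch shows one plausible interpretation: the row is repeated once per group of parameters, so a two-column row like the preceding one takes two parameters at a time. Treat this grouping rule and the helper name as assumptions, not as LEAN's documented behavior.

```python
import re
from typing import List, Tuple

INDEX_PATTERN = re.compile(r"\{\{\$KEY(\d+)\}\}")


def render_parameter_rows(template: str, parameters: List[Tuple[str, str]]) -> str:
    """Repeat `template` once per group of (name, value) parameters.

    The group size is the number of distinct {{$KEY<n>}} indices in the template.
    Slots left over in the last row are filled with empty strings.
    """
    per_row = len(set(INDEX_PATTERN.findall(template)))
    if per_row == 0:
        return ""
    rows = []
    for start in range(0, len(parameters), per_row):
        row = template
        for i in range(per_row):
            key, value = parameters[start + i] if start + i < len(parameters) else ("", "")
            row = row.replace("{{$KEY%d}}" % i, key).replace("{{$VALUE%d}}" % i, value)
        rows.append(row)
    return "".join(rows)
```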

Customize the Report CSS

The Report / css / report.css file in the LEAN GitHub repository defines the style of the reports you generate. To override the stylesheet, add a report.css file to your project.

Custom Report Example

To view an example of report.html and report.css files that customize the backtest reports of a project, see the files in this project. The HTML and CSS files in the project produce a report that has a red banner at the top.

Orders

The backtest results page displays the orders of your algorithm and you can view them on your local machine.

View in the GUI

To see the orders that your algorithm created, open the backtest results page and then click the Orders tab. If there are more than 10 orders, use the pagination tools at the bottom of the Orders Summary table to see all of the orders. Click an individual order in the Orders Summary table to reveal all of its order events, which include:

- Submissions

- Fills

- Partial fills

- Updates

- Cancellations

- Option contract exercises and expirations

The timestamps in the Orders Summary table are in Eastern Time (ET).

Access the Order Summary CSV

To view the orders data in CSV format, open the backtest results page, click the Orders tab, and then click . The CSV file contains the content that the Orders Summary table displays when its rows are collapsed. The timestamps in the CSV file are in Coordinated Universal Time (UTC). If you download the order summary CSV for a local backtest, the file is stored in <organizationWorkspace> / <projectName> / backtests / <unixTimestamp> / orders.csv.
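The CSV timestamps are in UTC, while the Orders Summary table shows Eastern Time. To compare the two, you can convert the CSV timestamps. The following Python sketch uses the standard zoneinfo module, so daylight saving time is handled. The default timestamp format is an assumption, so adjust fmt to match your file. The function name is hypothetical.

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo

EASTERN = ZoneInfo("America/New_York")


def utc_to_eastern(timestamp: str, fmt: str = "%Y-%m-%d %H:%M:%S") -> datetime:
    """Parse a naive UTC timestamp string and convert it to US Eastern Time.

    The default `fmt` is an assumption; change it to match your orders.csv file.
    """
    naive = datetime.strptime(timestamp, fmt)
    return naive.replace(tzinfo=timezone.utc).astimezone(EASTERN)
```

In June, Eastern Time is UTC-4, so utc_to_eastern("2023-06-14 15:54:59") returns 11:54:59 local time.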

Access the Orders JSON

To view all of the content in the Orders Summary table, open the <organizationWorkspace> / <projectName> / backtests / <unixTimestamp> / <algorithmId>.json file and search for the Orders key.

Access the Order Events JSON

To view all of the order events for a local backtest, open the <organizationWorkspace> / <projectName> / backtests / <unixTimestamp> / <algorithmId>-order-events.json file.
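To summarize the order events file, you can count how many events of each status every order received. This Python sketch assumes the file is a JSON array of event objects with "orderId" and "status" fields. Those field names are assumptions, so check a sample of your own file before relying on them. The function name is hypothetical.

```python
import json
from collections import Counter, defaultdict
from pathlib import Path
from typing import Dict


def status_counts_by_order(events_file: Path) -> Dict[int, Counter]:
    """Count the events of each status for every order in an order-events file.

    Assumes a JSON array of objects with "orderId" and "status" fields.
    """
    with events_file.open(encoding="utf-8") as f:
        events = json.load(f)
    counts: Dict[int, Counter] = defaultdict(Counter)
    for event in events:
        counts[event.get("orderId")][event.get("status")] += 1
    return dict(counts)
```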

Access in Jupyter Notebooks

To programmatically analyze orders, call the ReadBacktestOrders/read_backtest_orders method or the /backtests/orders/read endpoint. This method and endpoint only work if you deploy the algorithm in QuantConnect Cloud.

Insights

The backtest results page displays the insights of your algorithm and you can view the raw insight data on your local machine.

View in the GUI

To see the insights that your algorithm emits, open the backtest results page and then click the Insights tab. If there are more than 10 insights, use the pagination tools at the bottom of the Insights Summary table to see all of the insights. The timestamps in the Insights Summary table are in Eastern Time (ET).

Open Raw JSON

To view the insights in JSON format, open the backtest results page, click the Insights tab, and then click . The timestamps in the JSON file are in Coordinated Universal Time (UTC).

If you run a local backtest, the JSON file is also available in the <organizationWorkspace> / <projectName> / backtests / <unixTimestamp> / <algorithmId>-alpha-insights.json file.

Logs

The backtest results page displays the logs of your backtest and you can view them on your local machine. The timestamps of the statements in the log file are in your algorithm's time zone.

View in the GUI

To see the log file that was created throughout a backtest, open the backtest results page and then click the Logs tab.

To filter the logs, enter a search string in the Filter logs field.

Download Log Files

To download the log file that was created throughout a backtest, follow these steps:

- Open the backtest results page.

- Click the Logs tab.

- Click .

If you ran a local backtest, the log file is automatically saved on your local machine when the backtest completes.

Access Local Log Files

To view the log file of a local backtest, open the <organizationWorkspace> / <projectName> / backtests / <unixTimestamp> / <algorithmId>-log.txt file.
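To search a local log file the way the Filter logs field does, you can filter its lines by a substring. This page doesn't say whether the Filter logs field is case-sensitive, so the case-insensitive match in this Python sketch is a choice of the sketch. The function name is hypothetical.

```python
from pathlib import Path
from typing import List


def filter_log(log_file: Path, search: str) -> List[str]:
    """Return the log lines that contain `search`, ignoring case."""
    needle = search.lower()
    with log_file.open(encoding="utf-8", errors="replace") as f:
        return [line.rstrip("\n") for line in f if needle in line.lower()]
```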

Project Files

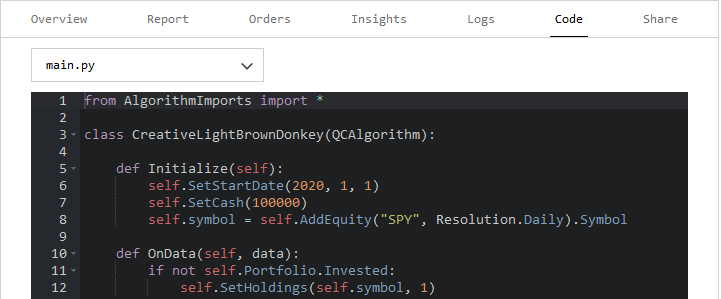

The backtest results page displays the project files used to run the backtest. To view the files, click the Code tab. By default, the main.py (Python) or Main.cs (C#) file displays. To view other files in the project, click the file name and then select a different file from the drop-down menu.

If you ran a local backtest, the project files are also available in the <organizationWorkspace> / <projectName> / backtests / <unixTimestamp> / code directory.

View Result Files

To view the result files of local backtests, run a local backtest and then open the <organizationWorkspace> / <projectName> / backtests / <unixTimestamp> directory. The following table describes the initial contents of the backtest result directories:

| File/Directory | Description |
|---|---|
| code / | A directory containing a copy of the files that were in the project when you ran the backtest. |
| <backtestId>-alpha-results.json (Ex: 1967791529-alpha-results.json) | A file containing all of the backtest insights. This file only exists if you emit insights during the backtest. |
| <backtestId>-log.txt (Ex: 1967791529-log.txt) | A file containing all of the backtest logs. |
| <backtestId>-order-events.json (Ex: 1967791529-order-events.json) | A file containing all of the backtest order events. |
| <backtestId>.json (Ex: 1967791529.json) | A file containing the backtest result data, such as the runtime statistics, charts, and orders. |
| config | A file containing some configuration settings, including the backtest Id, Docker container name, and backtest name. |
| data-monitor-report-<backtestDate><unixTimestamp>.json (Ex: data-monitor-report-20230614155459950.json) | A file containing statistics on the algorithm's data requests. |
| failed-data-requests-<backtestDate><unixTimestamp>.txt (Ex: failed-data-requests-20230614155451004.txt) | A file containing all the local data paths that LEAN failed to load during the backtest. |
| log.txt | A file containing the syslog. |
| succeeded-data-requests-<backtestDate><unixTimestamp>.txt (Ex: succeeded-data-requests-20230614155451004.txt) | A file containing all the local data paths that LEAN successfully loaded during the backtest. |
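If a backtest produces unexpected results, the failed data requests are a useful first check. The following Python sketch counts the paths listed in each failed-data-requests file of a backtest result directory. It assumes the files list one path per line, and the function name is hypothetical.

```python
from pathlib import Path
from typing import Dict


def failed_data_paths(backtest_dir: Path) -> Dict[str, int]:
    """Count the non-empty lines in each failed-data-requests-*.txt file.

    Each line is assumed to hold one local data path that LEAN couldn't load.
    """
    counts = {}
    for report in sorted(backtest_dir.glob("failed-data-requests-*.txt")):
        lines = report.read_text(encoding="utf-8").splitlines()
        counts[report.name] = sum(1 for line in lines if line.strip())
    return counts
```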

The backtest result directories can contain the following additional files if you request them:

| File | Description | Request Procedure |
|---|---|---|
| orders.csv | A file containing all of the data from the Orders Summary table when the table rows are collapsed. | See Orders |
| report.html and report.pdf | Files containing the backtest report. | See Reports |

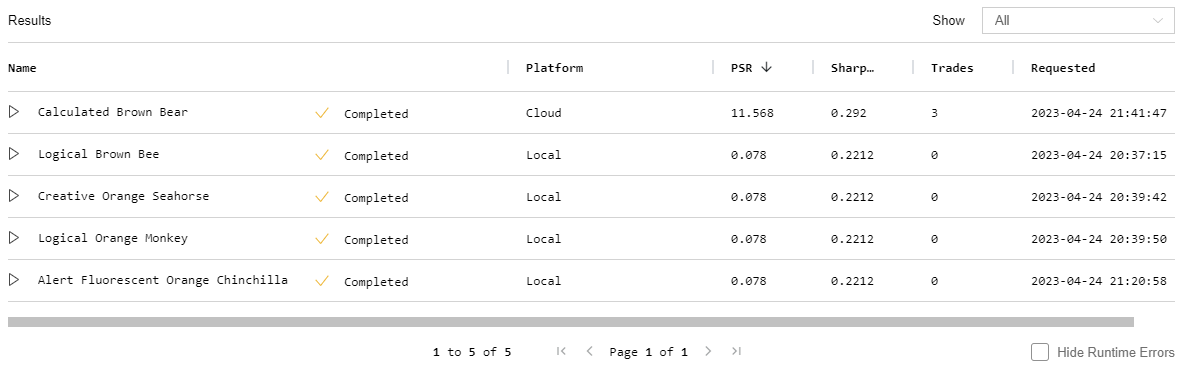

View All Backtests

Follow these steps to view all of the backtests of a project:

- Open the project that contains the backtests you want to view.

- In the top-right corner of the IDE, click the Backtest Results icon.

- (Optional) In the top-right corner, select the Show field and then select one of the options from the drop-down menu to filter the table by backtest or optimization results.

- (Optional) In the bottom-right corner, click the Hide Error check box to remove backtest and optimization results from the table that had a runtime error.

- (Optional) Use the pagination tools at the bottom to change the page.

- (Optional) Click a column name to sort the table by that column.

- Click a row in the table to open the results page of that backtest or optimization.

A table containing all of the backtest results for the project is displayed. If there is a play icon to the left of the name, it's a backtest result. If there is a fast-forward icon next to the name, it's an optimization result. The Platform column displays the deployment target of the backtest.

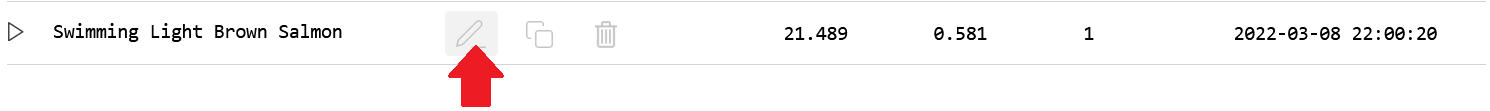

Rename Backtests

We give an arbitrary name (for example, "Smooth Apricot Chicken") to your backtest result files, but you can follow these steps to rename them:

- Hover over the backtest you want to rename and then click the pencil icon that appears.

- Enter the new backtest name and then click .

To programmatically set the backtest name, call the SetName/set_name method.

SetName("Backtest Name");

self.set_name("Backtest Name")

For more information, see Set Name and Tags.

Clone Backtests

Hover over the backtest you want to clone, and then click the clone icon that appears to clone the backtest.

A new project is created with the backtest code files.

Delete Backtests

Hover over the backtest you want to delete, and then click the trash can icon that appears to delete the backtest.